EMO Robot: The Art of Humanoid Lip Synchronization

I have to admit, one of the things that has always bothered me about sci-fi movies—or even modern robotics demonstrations—is the disconnect between voice and face. You know what I mean; the audio says “I am happy,” but the robot’s face looks like a frozen mask with a flapping jaw. It triggers that uncomfortable “Uncanny Valley” feeling instantly.

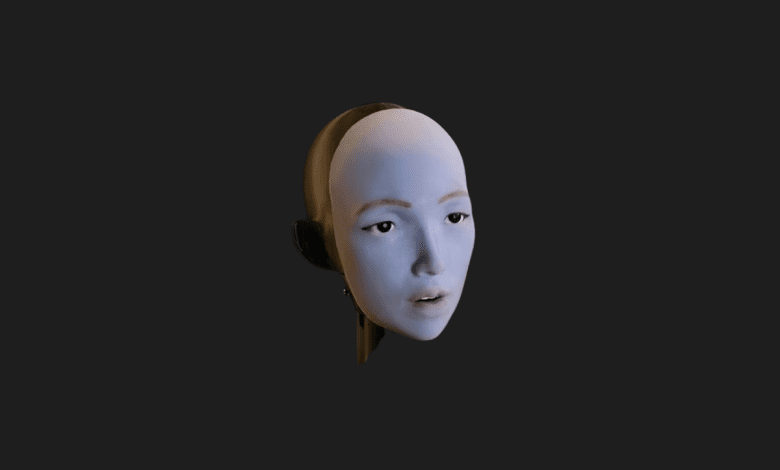

But recently, I came across a development from Columbia University that genuinely made me pause and rethink where we are heading. They’ve built a robot named EMO, and it’s doing something remarkably human: it is learning to speak by looking at itself in the mirror.

This isn’t just about moving a mouth; it’s about the subtle art of lip synchronization. As someone who follows every twitch and turn of the metaverse and robotics industry, I believe EMO represents a massive leap toward robots that we can actually connect with emotionally.

The Mirror Phase: Learning Like a Human

What fascinates me most about EMO isn’t just the hardware; it’s the learning process. The researchers, led by PhD student Yuhang Hu and Professor Hod Lipson, didn’t just program the robot with a database of “smile here” or “open mouth there” commands.

Instead, they treated EMO like a human infant.

- Self-Modeling: They placed the robot in front of a mirror.

- Babbling with Expressions: EMO spent hours making random faces, observing how its 26 internal motors (actuators) changed its reflection.

- The Feedback Loop: Through this visual feedback, the robot learned exactly which muscle twitch created which expression.

This approach is incredibly organic. It reminds me of the “Vision-Language-Action” (VLA) models we see in advanced AI. The robot isn’t following a script; it is building an internal map of its own physical capabilities.

Under the Hood: The Tech Behind the Smile

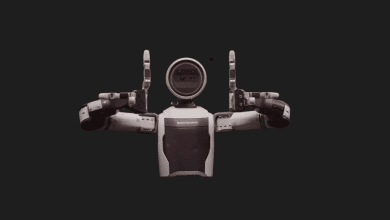

Let’s get a bit technical, but I’ll keep it simple. EMO isn’t just a rigid plastic head. To achieve realistic movement, the team covered the robotic skull with a soft, flexible silicone skin.

Beneath that skin lies a complex network of engineering:

- 26 Actuators: Think of these as facial muscles. They pull and push the silicone to mimic skin tension.

- Camera Eyes: High-resolution cameras in the pupils allow EMO to make eye contact and, crucially, to watch itself learn.

- Predictive AI: This is the secret sauce. EMO doesn’t just react to sound; it anticipates it.

Why Prediction Matters

When you and I talk, we shape our mouths milliseconds before the sound actually comes out. If a robot waits for the audio to start moving its lips, it already looks laggy and fake. EMO analyzes the audio stream and prepares its face slightly ahead of time, creating a much more natural, fluid conversation flow.

The YouTube Education

After EMO figured out how to control its own face in front of the mirror, it needed to learn how to speak. And where does everyone go to learn new skills these days? YouTube.

I found this part particularly relatable. The robot watched hours of videos of humans talking and singing. By analyzing these videos frame-by-frame, EMO learned the relationship between specific sounds (phonemes) and mouth shapes (visemes).

My Take: This self-supervised learning is scalable. It means we don’t need to manually animate every single word a robot says. We just feed it data, and it figures out the nuances of communication on its own.

The Current Limitations (The “B” and “W” Problem)

I value transparency in technology, so let’s not pretend EMO is perfect yet. Even the creators admit there are hurdles.

The robot currently struggles with sounds that require:

- Fully closing the lips (like the letter “B”).

- Complex rounding (like the letter “W”).

These are mechanically difficult movements to replicate with silicone and actuators because they require a seal. However, seeing how fast AI iterates, I suspect this is a temporary hardware hurdle rather than a software dead-end.

The Big Picture: Combining EMO with LLMs

Here is where my imagination starts to run wild. Imagine taking the brain of ChatGPT or Google Gemini and putting it inside EMO’s head.

Right now, we interact with AI via text or disembodied voices. But if you combine a Large Language Model (LLM) with a robot that can:

- Maintain eye contact,

- Smile at your jokes,

- Look concerned when you are sad,

- And lip-sync perfectly…

We are talking about a total paradigm shift in Human-Robot Interaction (HRI).

I can see this being revolutionary for telepresence. Imagine a metaverse avatar or a physical droid that represents you in a meeting, mimicking your exact facial expressions in real-time. Or consider the implications for elderly care—a companion robot that feels less like a machine and more like a friend because it communicates non-verbally just as well as it speaks.

Final Thoughts

The EMO robot is a testament to how far we’ve come from the “beep-boop” robots of the past. By moving away from rigid programming and embracing self-learning through observation, Columbia University has brought us one step closer to androids that don’t just exist in our world, but actually understand how to inhabit it socially.

It’s exciting, a little bit eerie, and undeniably cool.

I’m curious to hear your thoughts on this: If a robot could look you in the eye and speak with perfect emotional mimicry, would you feel more connected to it, or would it just creep you out even more?